Topic 4: Federated models and machine learning algorithms coming from different domains than those involved in TERMINET for testing, validating, and demonstrating their performance in the TERMINET use case

Topic 4 Patron: UOWM

Introduction

Organisations increasingly need to deploy smart customer-facing agents and personalised user experiences in order to strengthen their position and remain competitive in the market. By applying digital transformation principles, such as big data analytics and predictive maintenance analytics, businesses can optimise their supply chains using the resulting insights and predictions. Deploying Artificial Intelligence (AI) technology in the IoT domain is an effective way of implementing such intelligent services.

TERMINET offers effective synergies of IoT and AI that create a dynamic environment in which companies can gain high-quality insight into every piece of data, from what customers are actually looking at and touching to how employees, suppliers, and partners interact with different aspects of the IoT ecosystem. TERMINET contributes to the Next Generation IoT (NG-IoT) vision by moving decisions closer to the end user, while taking current operational limitations into account and strictly adhering to the principles of sensitive data preservation. A key technology enabler for this task is Federated Learning (FL).

TERMINET adopts the concept of FL to provide efficient distributed AI at the edge, using machine and deep learning algorithms to enable training and inference directly on devices such as sensors, drones, actuators, and gateways. As a result:

- the latency between events, e.g., between a detected signal and a device’s response to it, is significantly reduced;

- dependence on constant connectivity is reduced, since data can be processed where they are created or very close to it;

- privacy is strengthened, since the data stay at the edge, and this is complemented by additional privacy methodologies within the federated system;

- generalization of models is supported, since the resulting models are trained on data from multiple sources.

FL is an emerging technology and a new approach to machine learning, with immense potential to transform a variety of NG-IoT application domains [6]. As one of TERMINET’s cutting-edge next-generation technologies, FL will be validated and demonstrated in six realistic proof-of-concept use cases in compelling IoT domains such as energy, smart buildings, smart farming, healthcare, and manufacturing.

The aim of this topic is to design and develop new types of models, model architectures, algorithms, and deployment and optimization techniques that provide novel, optimized FL solutions for the environments and requirements described by TERMINET.

Functional Requirements

The TERMINET project will employ smart solutions with embedded intelligence, connectivity and processing capabilities for edge devices, relying on real-time processing at the edge of the IoT network, near the end user. However, distributed AI and FL face significant challenges and impose specific requirements that must be met regardless of the application domain [7], [8].

- In federated networks, communication is a critical bottleneck: privacy concerns require the data generated on each device to remain local, so training depends on repeatedly exchanging model information over networks that may be slow or costly. It is therefore essential to develop communication-efficient methods that iteratively send small messages or model updates as part of the training process, so that a model can be fitted to the data generated by the devices in the federated network. Communication can be reduced further by minimising the total number of communication rounds or by decreasing the size of the messages transmitted in each round.

- Another challenge of FL is the variability in hardware, network connectivity and power across federated networks. Depending on the size of the network and on the software constraints of each device, only a small number of devices may be active at any given time (for example, to conserve energy), which makes communication unreliable. Newly developed FL methods should therefore anticipate low participation, tolerate heterogeneous hardware, and be robust to devices dropping out of the network.

- Statistical heterogeneity is another major challenge of FL networks. It refers to data being distributed non-identically across devices, which complicates both the modelling of the data and the analysis of the convergence behaviour of the associated training procedures. A strong federated system should be capable of performing well on the global data distribution, for example by using personalised or device-specific modelling, regardless of how the learning process takes place locally in each user’s private data ecosystem.

- FL protects data privacy on each device by sharing only model updates instead of the raw data. Nevertheless, it has been shown that the model updates exchanged during training can still disclose sensitive information, either to a third party or to the central server. Recent work has sought to enhance the privacy of FL using tools such as secure multiparty computation or differential privacy. However, such privacy mechanisms often reduce model performance or system efficiency. It is of paramount importance to understand and balance these trade-offs, both theoretically and empirically, in order to realise private federated learning systems.
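Regarding the communication bottleneck listed above, one widely used way to decrease the size of the messages transmitted in each round is top-k sparsification: each device sends only the k largest-magnitude entries of its model update, together with their indices. The following is a minimal sketch in Python with NumPy, assuming the update is a dense array; `top_k_sparsify` and `densify` are hypothetical helper names, not part of any TERMINET component. Practical schemes usually also accumulate the discarded remainder locally (error feedback), which is omitted here.

```python
import numpy as np


def top_k_sparsify(update: np.ndarray, k: int):
    """Client side (hypothetical helper): keep the k largest-magnitude entries.

    Returns (indices, values) into the flattened update; only these would be
    transmitted instead of the full dense array.
    """
    flat = update.ravel()
    if k >= flat.size:
        idx = np.arange(flat.size)
    else:
        # argpartition selects the k largest |values| without a full sort
        idx = np.argpartition(np.abs(flat), -k)[-k:]
    return idx, flat[idx]


def densify(indices: np.ndarray, values: np.ndarray, shape) -> np.ndarray:
    """Server side (hypothetical helper): rebuild a dense update, zeros elsewhere."""
    dense = np.zeros(int(np.prod(shape)), dtype=values.dtype)
    dense[indices] = values
    return dense.reshape(shape)


update = np.array([0.01, -0.9, 0.05, 0.4, -0.02, 0.3])
idx, vals = top_k_sparsify(update, k=2)      # only 2 of 6 values are sent
print(densify(idx, vals, update.shape).tolist())  # → [0.0, -0.9, 0.0, 0.4, 0.0, 0.0]
```

Choosing k trades bandwidth against how faithfully each round’s update is reproduced at the server.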
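For the statistical heterogeneity challenge listed above, one published option is the proximal term used by FedProx: each device adds a penalty (mu / 2) * ||w - w_global||^2 to its local objective, so that local training on non-identically distributed data does not drift too far from the current global model. The sketch below assumes a linear least-squares model trained by full-batch gradient descent; the function name and the values of `mu`, `lr` and `epochs` are illustrative assumptions, and personalised FL methods, another possible answer to this challenge, are not shown.

```python
import numpy as np


def fedprox_local_update(w_global, X, y, mu=0.1, lr=0.05, epochs=50):
    """Local training with a FedProx-style proximal term (illustrative sketch).

    Minimises (1 / 2n) * ||X w - y||^2 + (mu / 2) * ||w - w_global||^2 by
    full-batch gradient descent, starting from the current global model.
    With mu = 0 this reduces to plain local gradient descent.
    """
    w = w_global.copy()
    n = X.shape[0]
    for _ in range(epochs):
        grad_loss = X.T @ (X @ w - y) / n    # gradient of the local data loss
        grad_prox = mu * (w - w_global)      # pulls w back towards the global model
        w -= lr * (grad_loss + grad_prox)
    return w


# A single device's local data; the global model starts at zero
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 3))
y = X @ np.array([2.0, -1.0, 0.5])
w_global = np.zeros(3)

for mu in (0.0, 1.0, 5.0):
    w_local = fedprox_local_update(w_global, X, y, mu=mu)
    drift = np.linalg.norm(w_local - w_global)
    print(f"mu={mu}: distance from global model = {drift:.3f}")
```

Larger values of `mu` keep local models closer to the global one, at the cost of fitting each device’s own data less closely.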
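For the privacy trade-off described above, a common building block of differentially private FL is to bound each update’s influence by clipping its L2 norm and then adding Gaussian noise scaled to that bound. The sketch below shows the client-side (local) variant; in many deployments the noise is instead added by the server to the aggregated sum, often together with secure aggregation. The function name and parameter values are illustrative assumptions, and turning `noise_multiplier` into a formal (epsilon, delta) guarantee requires a privacy accountant, which is not shown.

```python
import numpy as np


def privatise_update(update, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip a model update to L2 norm <= clip_norm, then add Gaussian noise.

    Noise standard deviation = noise_multiplier * clip_norm. More noise gives
    stronger privacy but a less accurate update.
    """
    rng = np.random.default_rng() if rng is None else rng
    norm = np.linalg.norm(update)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = update * scale
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise


rng = np.random.default_rng(42)
update = np.array([3.0, 4.0])                  # L2 norm 5, clipped down to norm 1
for sigma in (0.0, 0.1, 1.0):
    noisy = privatise_update(update, clip_norm=1.0, noise_multiplier=sigma, rng=rng)
    err = np.linalg.norm(noisy - update / 5.0)  # distance from the clipped update
    print(f"noise_multiplier={sigma}: deviation from clipped update = {err:.3f}")
```

The growing deviation as `noise_multiplier` increases is a small-scale view of the privacy versus model performance trade-off.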

Technical Requirements

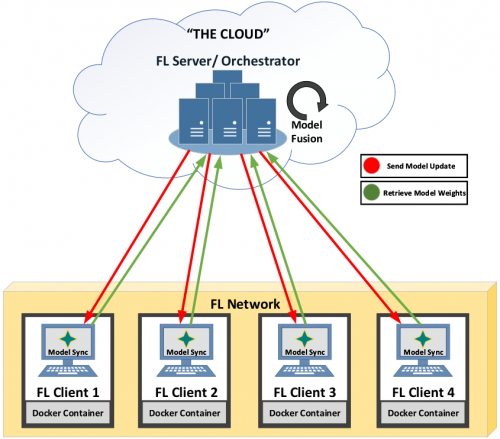

TERMINET will develop a distributed Federated Learning Framework (FLF), in which model training is distributed across a number of edge nodes, enabling privacy by design and efficiency in terms of training and processing requirements. Within the FLF, the edge nodes use their local data to train the advanced machine learning models required by the core AI machine, located at the IoT platform. This architecture allows the data to stay with the user, eliminating the need to transmit raw data to the IoT platform and store it there. The edge nodes then send model updates, instead of raw data, to the core AI machine for aggregation.
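The training-and-aggregation loop described above can be sketched as a small simulation. It assumes Federated Averaging (FedAvg), the standard baseline in which the server averages client models weighted by their number of local samples; the actual aggregation rule of TERMINET’s FLF is not specified here. The linear model, the hypothetical helpers `local_train` and `fedavg_aggregate`, and the 70% participation rate (mimicking devices that drop out) are all illustrative assumptions.

```python
import numpy as np


def local_train(w, X, y, lr=0.1, epochs=20):
    """Runs on an edge node: gradient descent on a least-squares loss.

    Only the resulting weights are returned; X and y never leave the node.
    """
    for _ in range(epochs):
        w = w - lr * X.T @ (X @ w - y) / len(y)
    return w


def fedavg_aggregate(client_updates):
    """Runs on the core AI machine: sample-weighted average of client models.

    client_updates holds (weights, num_samples) pairs from the nodes that
    reported back this round; nodes that dropped out are simply absent.
    """
    if not client_updates:
        raise ValueError("no client updates received this round")
    total = sum(n for _, n in client_updates)
    return sum(w * (n / total) for w, n in client_updates)


rng = np.random.default_rng(0)
true_w = np.array([1.0, -2.0])
clients = []                                   # each tuple is one node's private data
for n_samples in (50, 80, 30):
    X = rng.normal(size=(n_samples, 2))
    clients.append((X, X @ true_w))

w_global = np.zeros(2)
for _ in range(10):                            # communication rounds
    # each node participates with probability 0.7 to mimic unreliable devices
    reports = [(local_train(w_global, X, y), len(y))
               for X, y in clients if rng.random() < 0.7]
    if reports:                                # skip the round if every node dropped out
        w_global = fedavg_aggregate(reports)

print("global model after 10 rounds:", np.round(w_global, 3))
```

Because aggregation only uses the updates that actually arrive, a round with missing nodes still makes progress, which is one simple way of tolerating low participation.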

Figure 2: TERMINET’s centralised FL architectural approach [9]

To further support and validate the services of TERMINET’s FLF, and to enrich the overall TERMINET platform, additional machine learning algorithms and federated models from different domains should be implemented and trained to fit the TERMINET use cases. Among the variants of federated learning, federated transfer learning (FTL) is noteworthy, as it allows knowledge to be transferred across domains that have few overlapping features and users [10]. In FTL, training with heterogeneous data may present additional challenges; for example, the model may not adequately capture every client’s data distribution, and the quality of a particular local data partition may differ significantly from the rest. Vertical federated learning (vFL), in turn, allows multiple parties that own different attributes (e.g., features and labels) of the same data entity (e.g., a person) to jointly train a model [11]. TERMINET use cases can benefit greatly from either newly developed or pre-trained machine learning (ML) models, enhancing the robustness, reliability and accuracy of TERMINET’s predictive analytics and distributed AI capabilities.
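To make the vFL setting concrete, the sketch below trains a logistic regression model split across two parties that hold different feature columns for the same entities; party A also holds the labels. Each party keeps its raw features and its own weights, and only per-sample partial scores and the residual needed for the gradient are exchanged. This is a plaintext illustration under stated assumptions: the rows are assumed to be already aligned across parties (entity alignment, a step whose privacy is the subject of [11], is omitted), and practical vFL systems typically also protect the exchanged values, for example with cryptographic techniques. The `Party` class and `train_vertical` function are hypothetical names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class Party:
    """One vFL participant holding its own feature columns of the shared samples."""

    def __init__(self, X):
        self.X = X
        self.w = np.zeros(X.shape[1])

    def partial_score(self):
        # only this per-sample score leaves the party, not the raw features
        return self.X @ self.w

    def apply_residual(self, residual, lr):
        # gradient of the mean logistic loss w.r.t. this party's own weights
        self.w -= lr * self.X.T @ residual / len(residual)


def train_vertical(party_a, party_b, y, lr=0.5, epochs=200):
    """Party A holds the labels y and computes the residual for both parties."""
    for _ in range(epochs):
        z = party_a.partial_score() + party_b.partial_score()
        residual = sigmoid(z) - y
        party_a.apply_residual(residual, lr)
        party_b.apply_residual(residual, lr)
    return sigmoid(party_a.partial_score() + party_b.partial_score())


rng = np.random.default_rng(1)
n = 200
X_a = rng.normal(size=(n, 2))   # features held by one organisation
X_b = rng.normal(size=(n, 2))   # different features of the same entities, held by another
y = ((X_a[:, 0] + X_b[:, 1]) > 0).astype(float)   # label depends on both parties' data

probs = train_vertical(Party(X_a), Party(X_b), y)
accuracy = np.mean((probs > 0.5) == y)
print(f"training accuracy: {accuracy:.2f}")
```

Since the label depends on features from both parties, neither party could learn it alone, which is the situation vFL is designed for.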

Topic 4 winner

Title: Next Generation PersonaLized EDGE-AI HealthCare

Acronym: Next Gen PLEDGE


References

- [Yang2019] Q. Yang, Y. Liu, T. Chen and Y. Tong, ‘Federated Machine Learning: Concept and Applications’, ACM Transactions on Intelligent Systems and Technology, vol. 10, no. 2, Article 12, 2019, doi: https://doi.org/10.1145/3298981

- [Li2020] T. Li et al., ‘Federated Learning: Challenges, Methods, and Future Directions’, IEEE Signal Processing Magazine, vol. 37, no. 3, pp. 50–60, 2020

- [Xia2021] Q. Xia, W. Ye, Z. Tao, J. Wu and Q. Li, ‘A Survey of Federated Learning for Edge Computing: Research Problems and Solutions’, High-Confidence Computing, 2021, doi: https://doi.org/10.1016/j.hcc.2021.100008

- [Saha2021] S. Saha and T. Ahmad, ‘Federated Transfer Learning: concept and applications’, 2021, https://arxiv.org/abs/2010.15561

- [Sun2021] J. Sun, X. Yang, Y. Yao, A. Zhang, W. Gao, J. Xie and C. Wang, ‘Vertical Federated Learning without Revealing Intersection Membership’, 2021, https://arxiv.org/abs/2106.05508
